Developing client-server applications is complex and time-consuming, and it generally requires a skilled team of engineers. Emerging technologies like ChatGPT have the potential to streamline that process. In this post, we share our experience using ChatGPT3.0 to generate code for an Employee Portal application, highlighting the advantages and limitations we encountered along the way.

Harnessing the Power of ChatGPT3.0

Our development team, consisting of experienced backend and frontend engineers, set out to build an Employee Portal application. To streamline development, we used ChatGPT3.0, specifically the text-davinci-003 model developed by OpenAI. This language model generates code from natural-language prompts, which reduced our development effort on several tasks. Throughout this post, we share our firsthand experience working with ChatGPT, covering both its advantages in application development and its limitations.

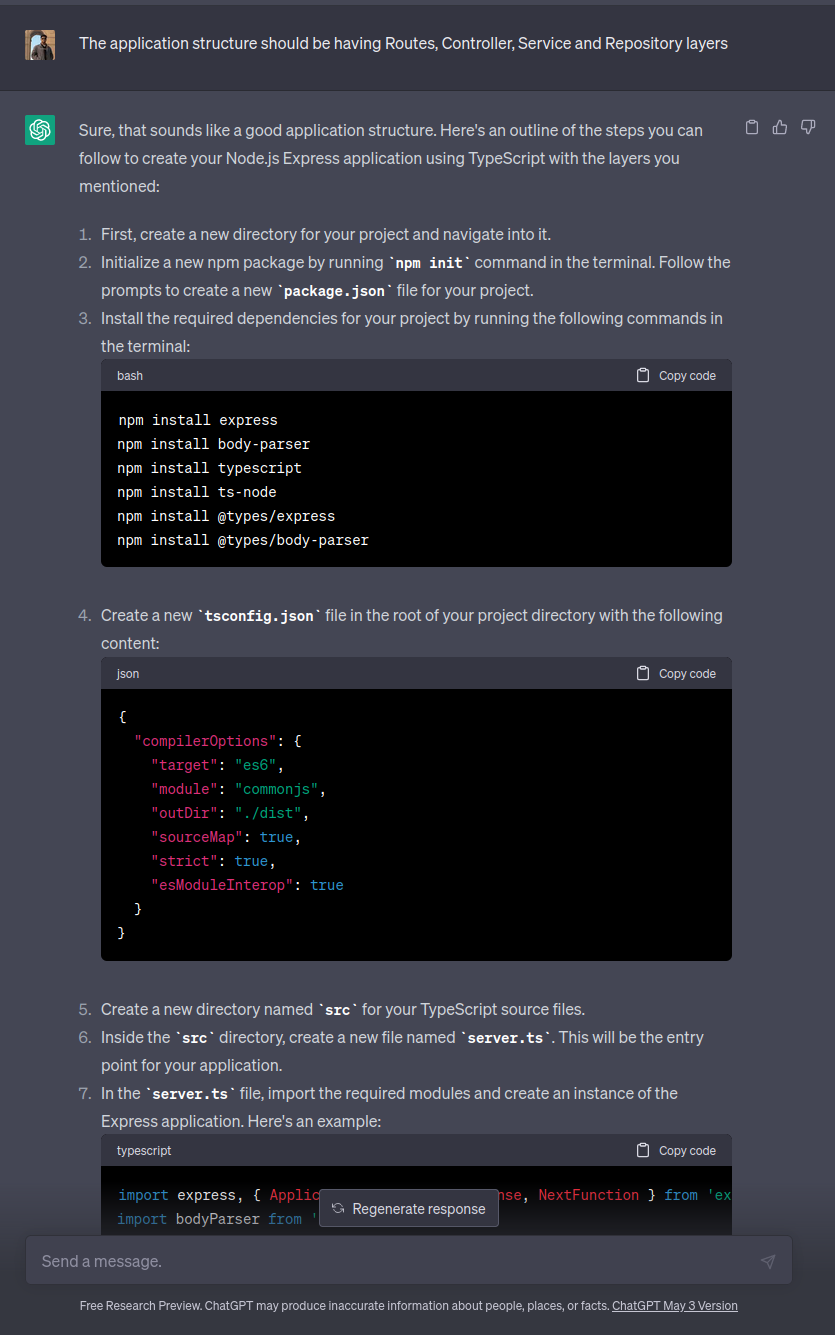

Choosing the Technology Stack: TypeScript - Node.js Express - Postgres

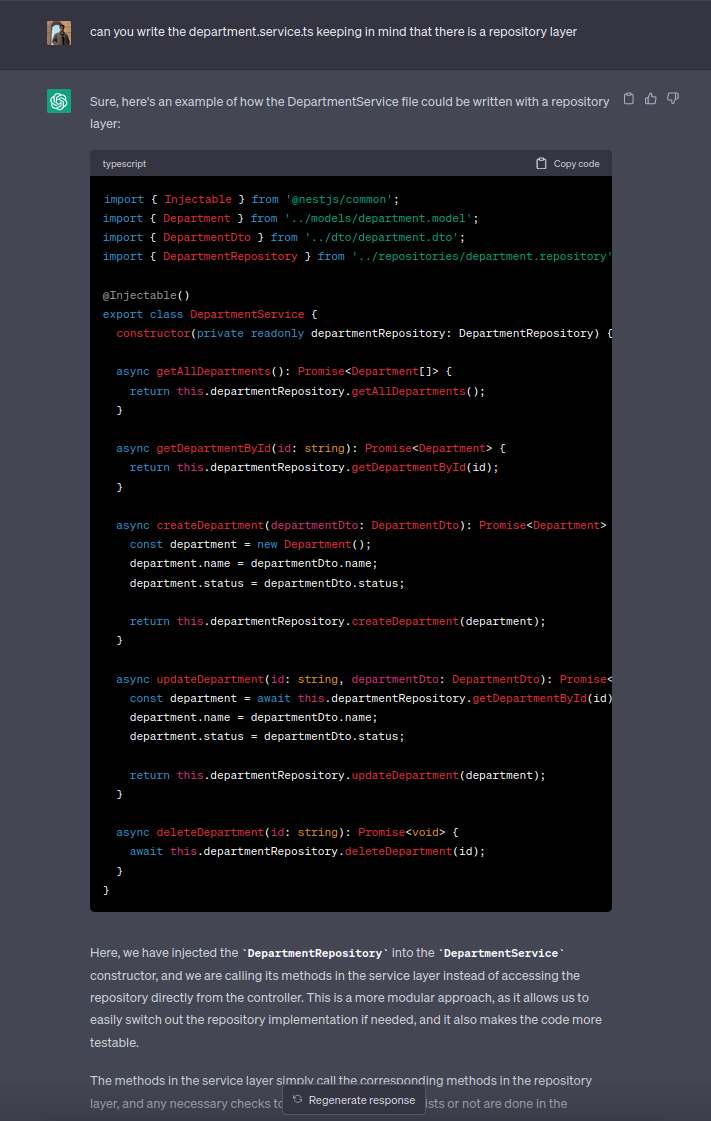

During our backend development with ChatGPT, we encountered both challenges and opportunities. We chose the TypeScript - Node.js Express - Postgres stack and successfully defined routes, controllers, and services. However, we faced a significant obstacle: answers from ChatGPT were often truncated unexpectedly, so we had to send additional requests to get the full answer. ChatGPT would also occasionally forget the context and return unrelated responses, which was a major hurdle in our pair programming experience.
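The follow-up requests for truncated answers could also be automated. The sketch below is one way to do that, not what we ran in this project. It assumes a completion-style API that reports why generation stopped, as OpenAI's completions endpoint does with `finish_reason: "length"`. The names `CompletionResult`, `CompleteFn`, and `completeFully` are hypothetical and not part of any SDK.

```typescript
// Result of one completion call. `finishReason` mirrors the API's
// `finish_reason` field: "length" means the token limit cut the output off.
interface CompletionResult {
  text: string;
  finishReason: string;
}

// Hypothetical client function: wire this to your SDK or HTTP call.
type CompleteFn = (prompt: string) => Promise<CompletionResult>;

// Keep asking for a continuation until the model stops on its own,
// or until maxRounds is reached (a guard against endless loops).
async function completeFully(
  complete: CompleteFn,
  prompt: string,
  maxRounds: number = 5,
): Promise<string> {
  let output = "";
  for (let round = 0; round < maxRounds; round++) {
    // Send the original prompt plus everything generated so far,
    // so the model continues the answer instead of restarting it.
    const result = await complete(prompt + output);
    output += result.text;
    if (result.finishReason !== "length") {
      break;
    }
  }
  return output;
}
```

Because the client is passed in as a function, the loop can be exercised with a fake client before it is connected to a real API.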

Breaking Down Tasks into Smaller Sub-tasks

To overcome these challenges, we adopted a strategy of breaking down tasks into smaller sub-tasks and assigning them to individual team members. By prompting ChatGPT with specific sub-tasks, rather than overwhelming it with the entire context, we observed improved speed and precision in its responses. Dividing the boilerplate setup and controllers into separate prompts allowed ChatGPT to generate code for each component. By distributing the sub-tasks among team members, we successfully pieced together the application.

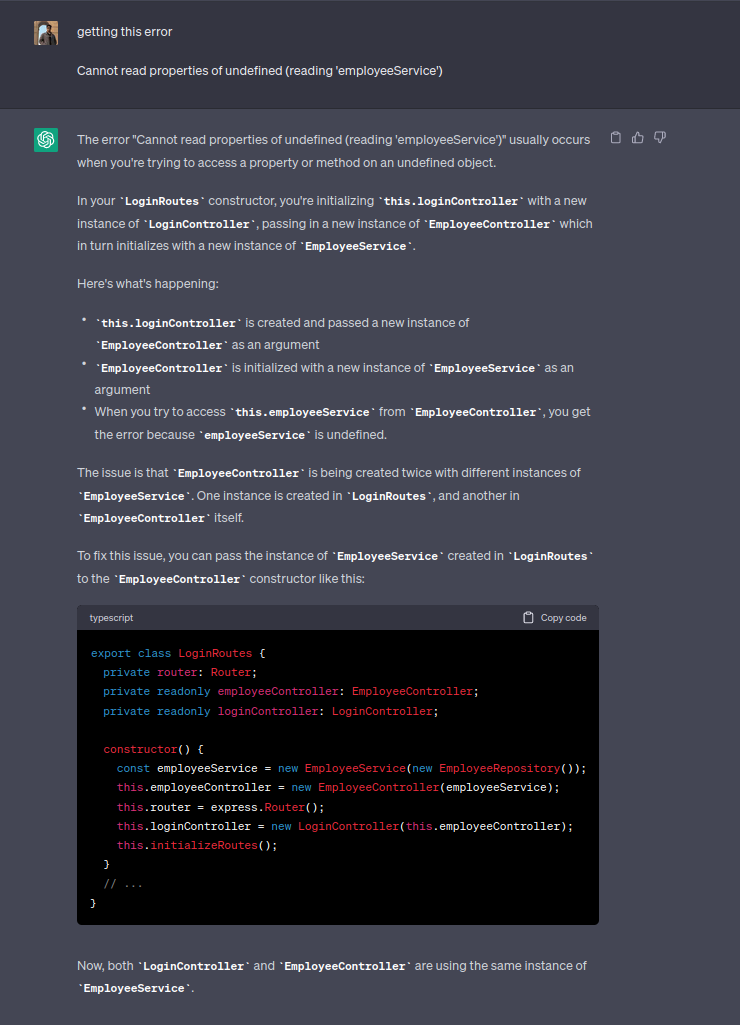
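The sub-task approach described above can be sketched as a small prompt builder. This is an illustration only: the wording of the prompts, the `SubTask` type, and the example tasks are assumptions, not the exact prompts we used. Each prompt repeats the stack so that it stands on its own without the rest of the conversation.

```typescript
// Stack constraint repeated in every prompt, so each request is self-contained.
const STACK = "TypeScript, Node.js with Express, and Postgres";

interface SubTask {
  name: string; // short label, e.g. "employee controller"
  instruction: string; // what this single prompt should produce
}

// Build one focused prompt per sub-task instead of one large prompt.
function buildPrompt(task: SubTask): string {
  return [
    "You are generating code for an Employee Portal backend.",
    `Use only ${STACK}.`,
    `Task (${task.name}): ${task.instruction}`,
    "Return only the code for this task.",
  ].join("\n");
}

// Hypothetical breakdown: boilerplate and controllers get separate prompts.
const subTasks: SubTask[] = [
  {
    name: "boilerplate",
    instruction: "Set up an Express app with JSON body parsing.",
  },
  {
    name: "employee controller",
    instruction: "Write a controller with handlers to list and create employees.",
  },
];

const prompts: string[] = subTasks.map(buildPrompt);
```

Each entry in `prompts` can then be handed to a different team member or sent as its own request.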

ChatGPT gave quick answers even without a deep understanding of the context, but it had clear limitations. It occasionally deviated from the requested programming language or framework, leading to code inconsistencies. Although ChatGPT excelled at debugging and solving tricky errors, its effectiveness relied heavily on the prompter's ability to provide adequate context. Developers with a strong grasp of the language and framework were better equipped to use ChatGPT effectively.

Developers vs. ChatGPT: Who Writes Better Test Cases?

We also attempted to use ChatGPT to write unit tests with Jest. ChatGPT helped set up Jest, but it struggled to generate tests for individual files because of the context loss issue. The generated code sometimes lacked essential patterns, constructors, and variables, resulting in less-than-ideal test cases. When we tried to correct ChatGPT's responses by reminding it of the context, the generated code sometimes became even more erratic. At this stage, developers proved more reliable than ChatGPT at producing accurate test cases.
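To illustrate why constructors and variables matter here, consider a service that receives its repository through the constructor. A test can only be written correctly if the author knows that constructor signature, which is exactly the context that was getting lost. The sketch below is hypothetical: `EmployeeService`, `EmployeeRepository`, and `InMemoryEmployeeRepository` are illustrative names, not code from our application.

```typescript
interface Employee {
  id: number;
  name: string;
}

// Dependency the service expects through its constructor.
interface EmployeeRepository {
  findById(id: number): Promise<Employee | undefined>;
}

class EmployeeService {
  private readonly repo: EmployeeRepository;

  constructor(repo: EmployeeRepository) {
    this.repo = repo;
  }

  // Returns the employee's name, or throws if no such employee exists.
  async getEmployeeName(id: number): Promise<string> {
    const employee = await this.repo.findById(id);
    if (!employee) {
      throw new Error(`Employee ${id} not found`);
    }
    return employee.name;
  }
}

// In-memory stand-in for a Postgres-backed repository, for tests only.
class InMemoryEmployeeRepository implements EmployeeRepository {
  private readonly rows: Employee[];

  constructor(rows: Employee[]) {
    this.rows = rows;
  }

  async findById(id: number): Promise<Employee | undefined> {
    return this.rows.find((row) => row.id === id);
  }
}
```

In a Jest suite, a test body would construct `new EmployeeService(new InMemoryEmployeeRepository([...]))` and check the results with `expect(...).resolves` and `expect(...).rejects`. A generated test that skips the constructor argument will not compile.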

Challenges Faced and Lessons Learned

Our experience with ChatGPT3.0 in developing the Employee Portal application has been both enlightening and challenging. Using this language model allowed us to streamline parts of the development process and generate code with less engineering effort. However, we also ran into limitations, such as truncated answers, the context loss issue, and occasional deviations from the requested programming language or framework.

Despite these challenges, ChatGPT has proven to be a valuable tool for debugging and solving tricky errors, providing quick solutions drawn from common patterns. It has significantly reduced the time spent on certain tasks and freed our team to focus on higher-level aspects of application development.

In the upcoming Part 2 of this blog series, we will embark on a comprehensive exploration of the application development process, starting from the front end and extending all the way to the deployment of the application. We will dive into how ChatGPT has influenced our approach in each stage of development and discuss the unique challenges and opportunities we encountered along the way.

Stay tuned as we continue to explore the potential of ChatGPT in simplifying application development throughout the entire development lifecycle. Subscribe to our blog to be notified when Part 2 is published, where we will share our experience with ChatGPT in front-end development and offer deeper insights into its capabilities.